Deploying Debezium on OpenShift

This procedure describes how to set up Debezium connectors on Red Hat’s OpenShift container platform. For development or testing on OpenShift, you can use CodeReady Containers.

To get started more quickly, try the Debezium online learning scenario. It starts an OpenShift cluster just for you, which lets you start using Debezium in your browser within a few minutes.

Prerequisites

To keep containers separated from other workloads on the cluster, create a dedicated project for Debezium.

In the remainder of this document, the debezium-example namespace will be used:

$ oc new-project debezium-example

Deploying Strimzi Operator

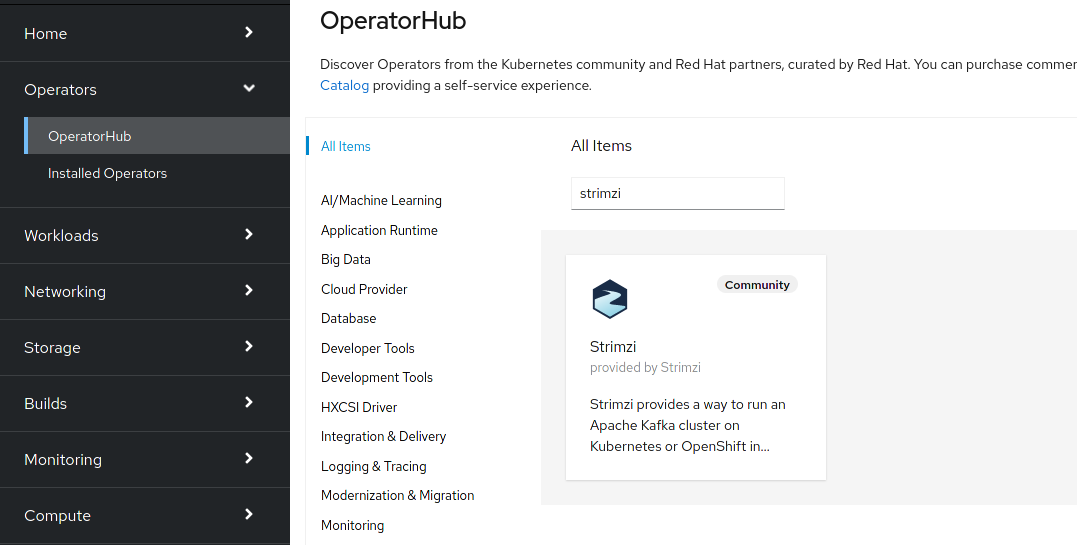

For the Debezium deployment we will use the Strimzi project, which manages the Kafka deployment on OpenShift clusters. The simplest way to install Strimzi is to install the Strimzi operator from OperatorHub. Navigate to the "OperatorHub" tab in the OpenShift UI, select "Strimzi" and click the "Install" button.

If you prefer command line tools, you can install the Strimzi operator this way as well:

$ cat << EOF | oc create -f -
apiVersion: operators.coreos.com/v1alpha1
kind: Subscription
metadata:
  name: my-strimzi-kafka-operator
  namespace: openshift-operators
spec:
  channel: stable
  name: strimzi-kafka-operator
  source: operatorhubio-catalog
  sourceNamespace: olm
EOF

Creating Secrets for the Database

Later on, when deploying the Debezium Kafka connector, we will need to provide a username and password so that the connector can connect to the database.

For security reasons, it’s good practice not to provide the credentials directly, but to keep them in a separate, secured place.

OpenShift provides the Secret object for this purpose.

Besides creating the Secret object itself, we also have to create a role and a role binding so that Kafka Connect can access the credentials.

Let’s create the Secret object first:

$ cat << EOF | oc create -f -
apiVersion: v1
kind: Secret
metadata:
  name: debezium-secret
  namespace: debezium-example
type: Opaque
data:
  username: ZGViZXppdW0=
  password: ZGJ6
EOF

The username and password contain base64-encoded credentials (debezium/dbz) for connecting to the MySQL database, which we will deploy later.
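The encoded values in the Secret can be reproduced or checked locally. The following is a minimal sketch, assuming a `base64` command-line tool is available; the helper names `encode_secret_value` and `decode_secret_value` are hypothetical and exist only for this illustration.

```shell
# Encode a credential for the data: section of a Secret.
# printf is used instead of echo so that no trailing newline ends up in the value.
encode_secret_value() {
  printf '%s' "$1" | base64
}

# Decode a value to check what a Secret manifest actually contains.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}

encode_secret_value debezium   # ZGViZXppdW0=
encode_secret_value dbz        # ZGJ6
decode_secret_value ZGJ6       # dbz
```

Keep in mind that base64 is an encoding, not encryption: anyone who can read the Secret can recover the credentials.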

Now we can create a role that refers to the secret created in the previous step:

$ cat << EOF | oc create -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: connector-configuration-role
  namespace: debezium-example
rules:
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["debezium-secret"]
  verbs: ["get"]
EOF

We also have to bind this role to the Kafka Connect cluster service account so that Kafka Connect can access the secret:

$ cat << EOF | oc create -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: connector-configuration-role-binding
  namespace: debezium-example
subjects:
- kind: ServiceAccount
  name: debezium-connect-cluster-connect
  namespace: debezium-example
roleRef:
  kind: Role
  name: connector-configuration-role
  apiGroup: rbac.authorization.k8s.io
EOF

The service account will be created by Strimzi once we deploy Kafka Connect.

The name of the service account takes the form $KafkaConnectName-connect.

Later on, we will create a Kafka Connect cluster named debezium-connect-cluster, and therefore we used debezium-connect-cluster-connect here as the subjects.name.
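The naming rule for the service account can be written down as a small helper. This is only a sketch of the $KafkaConnectName-connect convention described above; the function name `connect_service_account` is hypothetical, and the commented `oc` commands only make sense after Kafka Connect has been deployed.

```shell
# Derive the service account name from the KafkaConnect resource name,
# following the "<KafkaConnect name>-connect" convention.
connect_service_account() {
  printf '%s-connect\n' "$1"
}

sa_name="$(connect_service_account debezium-connect-cluster)"
echo "$sa_name"   # debezium-connect-cluster-connect

# Once Kafka Connect is running, the account and its permission could be checked with:
#   oc get serviceaccount "$sa_name" -n debezium-example
#   oc auth can-i get secret/debezium-secret -n debezium-example \
#     --as "system:serviceaccount:debezium-example:$sa_name"
```

If the KafkaConnect resource is given a different name, the subjects.name in the role binding has to change accordingly.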

Deploying Apache Kafka

Next, deploy a Kafka cluster with three nodes, defined through a KafkaNodePool:

$ cat << EOF | oc create -n debezium-example -f -
---
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaNodePool
metadata:
  name: debezium-cluster-node-pool
  labels:
    strimzi.io/cluster: debezium-cluster
spec:
  replicas: 3
  roles:
    - broker
    - controller
  storage:
    type: ephemeral
---
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: debezium-cluster
  annotations:
    strimzi.io/node-pools: enabled
    strimzi.io/kraft: enabled
spec:
  kafka:
    version: "3.9.0"
    listeners:
      - name: plain
        port: 9092
        type: internal
        tls: false
      - name: tls
        port: 9093
        type: internal
        tls: true
        authentication:
          type: tls
      - name: external
        port: 9094
        type: nodeport
        tls: false
    config:
      offsets.topic.replication.factor: 3
      transaction.state.log.replication.factor: 3
      transaction.state.log.min.isr: 2
      default.replication.factor: 3
      min.insync.replicas: 2
  entityOperator:
    topicOperator: {}
    userOperator: {}
EOF

Wait until it’s ready:

$ oc wait kafka/debezium-cluster --for=condition=Ready --timeout=300s

Deploying a Data Source

MySQL will be used as the data source in the following steps. Besides a pod running MySQL, a service that points to the database pod is needed. Both can be created, for example, as follows:

$ cat << EOF | oc create -f -
apiVersion: v1
kind: Service
metadata:
  name: mysql
spec:
  ports:
  - port: 3306
  selector:
    app: mysql
  clusterIP: None
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mysql
spec:
  selector:
    matchLabels:
      app: mysql
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
      - image: quay.io/debezium/example-mysql:3.3
        name: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: debezium
        - name: MYSQL_USER
          value: mysqluser
        - name: MYSQL_PASSWORD
          value: mysqlpw
        ports:
        - containerPort: 3306
          name: mysql
EOF

Deploying a Debezium Connector

To deploy a Debezium connector, you need to deploy a Kafka Connect cluster with the required connector plug-in(s), before instantiating the actual connector itself. As the first step, a container image for Kafka Connect with the plug-in has to be created. If you already have a container image built and available in the registry, you can skip this step. In this document, the MySQL connector will be used as an example.

Creating Kafka Connect Cluster

Again, we will use Strimzi for creating the Kafka Connect cluster.

Strimzi can also build and push the required container image for us.

In fact, both tasks can be combined: the instructions for building the container image can be provided directly within the KafkaConnect object specification:

$ cat << EOF | oc create -f -
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaConnect
metadata:
  name: debezium-connect-cluster
  annotations:
    strimzi.io/use-connector-resources: "true"
spec:
  version: 3.1.0
  replicas: 1
  bootstrapServers: debezium-cluster-kafka-bootstrap:9092
  config:
    config.providers: secrets
    config.providers.secrets.class: io.strimzi.kafka.KubernetesSecretConfigProvider
    group.id: connect-cluster
    offset.storage.topic: connect-cluster-offsets
    config.storage.topic: connect-cluster-configs
    status.storage.topic: connect-cluster-status
    # -1 means it will use the default replication factor configured in the broker
    config.storage.replication.factor: -1
    offset.storage.replication.factor: -1
    status.storage.replication.factor: -1
  build:
    output:
      type: docker
      image: image-registry.openshift-image-registry.svc:5000/debezium-example/debezium-connect-mysql:latest
    plugins:
      - name: debezium-mysql-connector
        artifacts:
          - type: tgz
            url: https://repo1.maven.org/maven2/io/debezium/debezium-connector-mysql/{debezium-version}/debezium-connector-mysql-{debezium-version}-plugin.tar.gz
EOF

Here we took advantage of the OpenShift built-in registry, which already runs as a service on the OpenShift cluster.

For simplicity, we’ve skipped the checksum validation for the downloaded artifact.

If you want to be sure the artifact was correctly downloaded, specify its checksum in the artifact definition.
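To obtain a checksum for the plug-in archive, you can download it once and hash it locally. The sketch below assumes that the expected checksum is a SHA-512 digest and that `sha512sum` is installed. The helper names `plugin_url` and `file_sha512` are hypothetical, and 0.0.0.Final is a placeholder, not a real Debezium version. Check the Strimzi documentation for the exact field that takes the checksum.

```shell
# Build the Maven Central URL of the MySQL connector plug-in archive for a given version,
# following the URL pattern used in the KafkaConnect build section.
plugin_url() {
  printf 'https://repo1.maven.org/maven2/io/debezium/debezium-connector-mysql/%s/debezium-connector-mysql-%s-plugin.tar.gz\n' "$1" "$1"
}

# Print only the hexadecimal SHA-512 digest of a local file.
file_sha512() {
  sha512sum "$1" | cut -d ' ' -f 1
}

# Example usage (needs network access; replace 0.0.0.Final with the version you deploy):
#   curl -fsSLO "$(plugin_url 0.0.0.Final)"
#   file_sha512 debezium-connector-mysql-0.0.0.Final-plugin.tar.gz
```

Computing the digest from a copy you trust, rather than from the same download that the build performs, is what makes the check meaningful.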

If you already have a suitable container image either in the local or a remote registry (such as quay.io or DockerHub), you can use this simplified version:

$ cat << EOF | oc create -f -
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaConnect
metadata:
  name: debezium-connect-cluster
  annotations:
    strimzi.io/use-connector-resources: "true"
spec:
  version: 3.1.0
  image: 10.110.154.103/debezium-connect-mysql:latest
  replicas: 1
  bootstrapServers: debezium-cluster-kafka-bootstrap:9092
  config:
    config.providers: secrets
    config.providers.secrets.class: io.strimzi.kafka.KubernetesSecretConfigProvider
    group.id: connect-cluster
    offset.storage.topic: connect-cluster-offsets
    config.storage.topic: connect-cluster-configs
    status.storage.topic: connect-cluster-status
    # -1 means it will use the default replication factor configured in the broker
    config.storage.replication.factor: -1
    offset.storage.replication.factor: -1
    status.storage.replication.factor: -1
EOF

Note also that we have configured the config provider to use the Strimzi secret provider.

The Strimzi secret provider will create a service account for this Kafka Connect cluster (which we have already bound to the appropriate role) and allow Kafka Connect to access our Secret object.

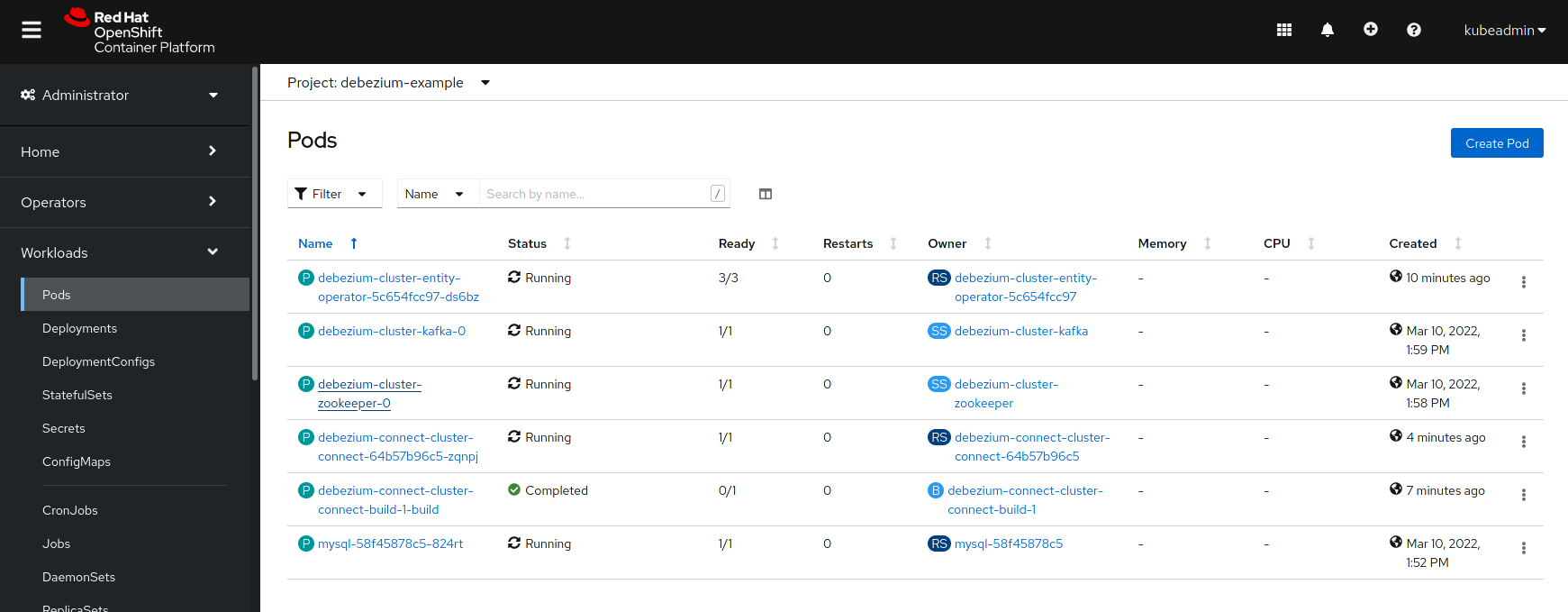

Before creating a Debezium connector, check that all pods are already running:
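The same check can be done from the command line. The following sketch assumes the default table output of `oc get pods` (columns NAME, READY, STATUS, RESTARTS, AGE); the helper name `all_pods_running` is hypothetical. Pods in the Completed state, such as a finished image build, are treated as fine.

```shell
# Read `oc get pods` output on stdin and succeed only if every listed pod
# is in the Running or Completed state. The first line (the header) is skipped.
all_pods_running() {
  awk 'NR > 1 && $3 != "Running" && $3 != "Completed" { bad = 1 } END { exit bad + 0 }'
}

# Example usage against the cluster:
#   oc get pods -n debezium-example | all_pods_running && echo "all pods are running"
```

If the check fails, `oc get pods -n debezium-example` on its own shows which pod is still starting or has failed.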

Creating a Debezium Connector

To create a Debezium connector, you just need to create a KafkaConnector with the appropriate configuration, MySQL in this case:

$ cat << 'EOF' | oc create -f -
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaConnector
metadata:
  name: debezium-connector-mysql
  labels:
    strimzi.io/cluster: debezium-connect-cluster
spec:
  class: io.debezium.connector.mysql.MySqlConnector
  tasksMax: 1
  config:
    tasks.max: 1
    database.hostname: mysql
    database.port: 3306
    database.user: ${secrets:debezium-example/debezium-secret:username}
    database.password: ${secrets:debezium-example/debezium-secret:password}
    database.server.id: 184054
    topic.prefix: mysql
    database.include.list: inventory
    schema.history.internal.kafka.bootstrap.servers: debezium-cluster-kafka-bootstrap:9092
    schema.history.internal.kafka.topic: schema-changes.inventory
EOF

As you can see, we didn’t use a plain-text user name and password in the connector configuration, but referred to the Secret object we created previously.

The heredoc delimiter is quoted ('EOF') so that the shell does not try to expand the ${secrets:...} placeholders before the manifest reaches OpenShift.
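The placeholders in the connector configuration follow the pattern ${secrets:namespace/secret-name:key}. As a small illustration of that pattern, the sketch below assembles such a reference from its parts; the helper name `secret_ref` is hypothetical. The single quotes around the format string keep the shell from interpreting the dollar sign and braces.

```shell
# Build a config provider placeholder of the form ${secrets:<namespace>/<secret name>:<key>}.
secret_ref() {
  printf '${secrets:%s/%s:%s}\n' "$1" "$2" "$3"
}

secret_ref debezium-example debezium-secret username
# ${secrets:debezium-example/debezium-secret:username}

# After the connector is created, its state could be inspected with:
#   oc get kafkaconnector debezium-connector-mysql -n debezium-example
```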

Verifying the Deployment

To verify that everything works, you can, for example, start watching the mysql.inventory.customers Kafka topic:

$ oc run -n debezium-example -it --rm --image=quay.io/debezium/tooling:1.2 --restart=Never watcher -- kcat -b debezium-cluster-kafka-bootstrap:9092 -C -o beginning -t mysql.inventory.customers

Connect to the MySQL database:

$ oc run -n debezium-example -it --rm --image=mysql:8.2 --restart=Never --env MYSQL_ROOT_PASSWORD=debezium mysqlterm -- mysql -hmysql -P3306 -uroot -pdebezium

Make some changes in the customers table of the inventory database:

sql> update inventory.customers set first_name="Sally Marie" where id=1001;

You should now be able to observe the change events on the Kafka topic:

{
  ...
  "payload": {
    "before": {
      "id": 1001,
      "first_name": "Sally",
      "last_name": "Thomas",
      "email": "sally.thomas@acme.com"
    },
    "after": {
      "id": 1001,
      "first_name": "Sally Marie",
      "last_name": "Thomas",
      "email": "sally.thomas@acme.com"
    },
    "source": {
      "version": "{debezium-version}",
      "connector": "mysql",
      "name": "mysql",
      "ts_ms": 1646300467000,
      "ts_us": 1646300467000000,
      "ts_ns": 1646300467000000000,
      "snapshot": "false",
      "db": "inventory",
      "sequence": null,
      "table": "customers",
      "server_id": 223344,
      "gtid": null,
      "file": "mysql-bin.000003",
      "pos": 401,
      "row": 0,
      "thread": null,
      "query": null
    },
    "op": "u",
    "ts_ms": 1646300467746,
    "ts_us": 1646300467746178,
    "ts_ns": 1646300467746178963,
    "transaction": null
  }
}

If you have any questions or requests related to running Debezium on Kubernetes or OpenShift, then please let us know in our user group or in the Debezium developer’s chat.